SpaCy GPU

Set Up Environment

It's relatively easy to use SpaCy with a GPU these days.

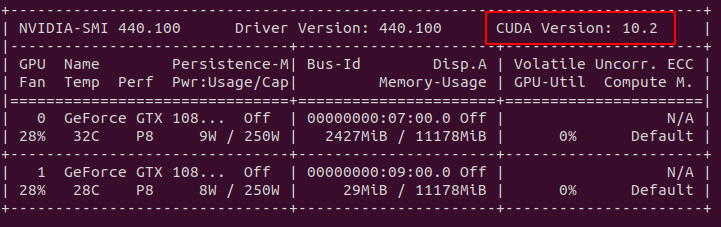

First set up your conda environment and install cudatoolkit (use nvidia-smi to match the toolkit version with your drivers):

Run nvidia-smi:

Create conda env:

conda create -n test python=3.8

conda activate test

conda install pytorch cudatoolkit=10.2 -c pytorch
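Before going further, it can be worth confirming that the PyTorch build you just installed can actually see the GPU. The following is a minimal sketch, not part of the original setup; the helper name `cuda_status` is made up for illustration, and it only uses standard PyTorch calls (`torch.cuda.is_available()`, `torch.version.cuda`, `torch.cuda.get_device_name()`).

```python
def cuda_status():
    """Describe what PyTorch can see, or return None if torch is not installed.

    `cuda_status` is a hypothetical helper name used for this sketch only.
    """
    try:
        import torch
    except ImportError:
        return None

    available = torch.cuda.is_available()
    return {
        "torch_version": torch.__version__,
        # CUDA version PyTorch was built against; None for CPU-only builds
        "cuda_build": torch.version.cuda,
        "cuda_available": available,
        "device_name": torch.cuda.get_device_name(0) if available else None,
    }


if __name__ == "__main__":
    print(cuda_status())
```

If `cuda_available` comes back as `False`, the most likely cause is a mismatch between the cudatoolkit version and the driver version reported by nvidia-smi.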

Installing SpaCy

Now install spacy - depending on how you like to manage your python environments either carry on using conda for everything or switch to your preferred package manager at this point.

conda install -c conda-forge spacy cupy

or

pdm add 'spacy[cuda-autodetect]'

Download Models

Download a spacy transformer model to make use of your GPU/CUDA setup:

python -m spacy download en_core_web_trf

Using GPU

As soon as your code starts, call prefer_gpu() or require_gpu() to tell spacy to load cupy, then load your model. require_gpu() raises an error if no GPU is available, while prefer_gpu() falls back to the CPU and returns False:

import spacy

spacy.require_gpu()

nlp = spacy.load('en_core_web_trf')

Now you can use the model to do some stuff:

doc = nlp("My name is Wolfgang and I live in Berlin")

for ent in doc.ents:

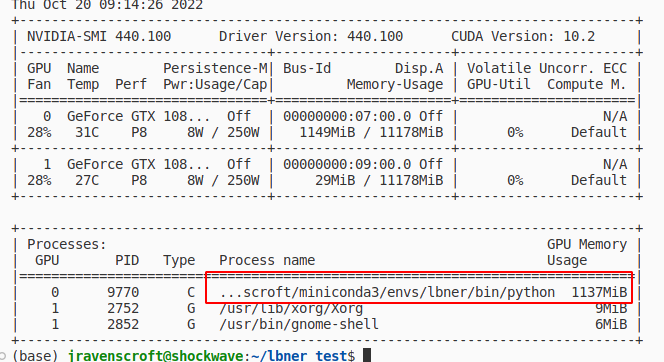

print(ent.text, ent.label_)You can check that the GPU is actually in use with nvidia-smi:
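If you would rather do the same check from Python than eyeball the terminal output, something like the sketch below should work. It shells out to nvidia-smi using its `--query-gpu` and `--format` options; the function name `gpu_memory_report` is an assumption made for this example, and it returns `None` when nvidia-smi is not on the PATH.

```python
import shutil
import subprocess


def gpu_memory_report():
    """Return one 'name, used, total' line per GPU, or None if unavailable.

    `gpu_memory_report` is a hypothetical helper name used for this sketch.
    """
    if shutil.which("nvidia-smi") is None:
        return None  # no NVIDIA driver tools on this machine

    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=name,memory.used,memory.total",
            "--format=csv,noheader",
        ],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return None  # nvidia-smi exists but could not talk to the driver

    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    print(gpu_memory_report())
```

Calling it once before and once after loading the model should show the used memory jump if the model really landed on the GPU.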

Also, if you try to use transformer models without a GPU, it will hang for AGES and max out your CPUs - another tell that something's not quite right.
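One way to avoid finding this out the hard way is to fail fast when the GPU is not picked up. The sketch below relies on the fact that `spacy.prefer_gpu()` returns `True` when a GPU was activated and `False` otherwise; the wrapper name `activate_gpu` and its `allow_cpu` flag are made up for illustration.

```python
def activate_gpu(allow_cpu=False):
    """Try to activate the GPU for spaCy and report whether it worked.

    `activate_gpu` and `allow_cpu` are hypothetical names for this sketch.
    Call this before spacy.load() so the model is allocated on the GPU.
    """
    import spacy  # imported here so the sketch fails clearly if spaCy is missing

    on_gpu = spacy.prefer_gpu()  # True if a GPU was activated, False otherwise
    if not on_gpu and not allow_cpu:
        raise RuntimeError(
            "No GPU available to spaCy; transformer models will be very slow on CPU."
        )
    return on_gpu


if __name__ == "__main__":
    print("Running on GPU:", activate_gpu(allow_cpu=True))
```

With `allow_cpu=False` this behaves much like calling `require_gpu()` directly, just with an error message of your own choosing; with `allow_cpu=True` you get a boolean you can log before deciding whether to carry on.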